This implementation draws on:

https://github.com/TsMask/face-api-demo-vue

https://blog.csdn.net/xingfei_work/article/details/76166391

The model files can be downloaded from https://github.com/TsMask/face-api-demo-vue

Environment setup

npm i face-api.js

import * as faceapi from "face-api.js";

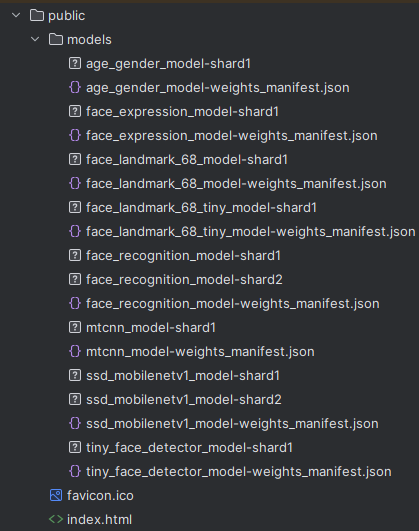

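Then copy the model folder into the project's public directory. The code below loads the models from /models, so the files need to end up in public/models/.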
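A misplaced model folder usually only shows up later as a failed request while the models load. As a rough sanity check, the sketch below requests each model's manifest file and reports the ones that cannot be reached. The helper name `findMissingModels`, the injectable `fetchFn` parameter, and the manifest file names (assumed to follow the naming in the face-api.js weights folder) are assumptions, so adjust them to the files actually present in your model folder.

```javascript
// Manifest files assumed to exist for the models loaded in this article.
const MODEL_MANIFESTS = [
  "ssd_mobilenetv1_model-weights_manifest.json",
  "tiny_face_detector_model-weights_manifest.json",
  "mtcnn_model-weights_manifest.json",
  "face_landmark_68_model-weights_manifest.json",
  "face_recognition_model-weights_manifest.json",
];

// Hypothetical helper: resolves to the manifest URLs that could not be fetched.
// fetchFn defaults to the global fetch and can be swapped out for testing.
async function findMissingModels(baseUrl = "/models", fetchFn = fetch) {
  const missing = [];
  for (const name of MODEL_MANIFESTS) {
    const url = `${baseUrl}/${name}`;
    try {
      const res = await fetchFn(url);
      if (!res.ok) missing.push(url);
    } catch (err) {
      // Network errors count as missing too.
      missing.push(url);
    }
  }
  return missing;
}
```

In the browser console, `findMissingModels().then(console.log)` should print an empty array when every file is served under /models.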

Implementation

HTML structure

<el-dialog
  title="Face Recognition"
  :visible.sync="dialogVisible"
  width="50%"
>
  <div style="display: flex; position: relative; height: 400px">
    <video
      ref="video"
      style="position: absolute; top: 50%; left: 50%; transform: translate(-50%, -50%)"
      width="400"
      height="300"
      autoplay
    ></video>
    <!-- The canvas floats above the video and displays the recognition box -->
    <canvas
      ref="canvas"
      style="position: absolute; top: 50%; left: 50%; transform: translate(-50%, -50%)"
      width="300"
      height="300"
    ></canvas>
  </div>
  <span slot="footer" class="dialog-footer">
    <el-button @click="dialogVisible = false">Cancel</el-button>
  </span>
</el-dialog>

Data definitions

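state: {
  /** Whether the models are still being loaded */
  netsLoadModel: true,
  /** Detection model to use */
  netsType: "ssdMobilenetv1",
  /** Options for each detection model */
  netsOptions: {
    ssdMobilenetv1: undefined,
    tinyFaceDetector: undefined,
    mtcnn: undefined,
  },
  /** FaceMatcher built from the target image; null until a face has been found in it */
  faceMatcher: null,
  /** Video element used for recognition */
  discernVideoEl: null,
  /** Canvas overlay element used for drawing */
  discernCanvasEl: null,
  /** Handle of the detection timer */
  timer: 0,
},
dialogVisible: false,
/** Path or URL of the target image to compare against */
targetImg: "",
/** Set to true once recognition has succeeded */
recogFlag: false,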

Loading the models

/** Load the models and build the matcher for the target image */
async fnLoadModel() {
  // Face landmark model
  await faceapi.loadFaceLandmarkModel("/models");
  // Face recognition model
  await faceapi.loadFaceRecognitionModel("/models");
  // ssdMobilenetv1 model and options
  await faceapi.nets.ssdMobilenetv1.loadFromUri("/models");
  this.state.netsOptions.ssdMobilenetv1 = new faceapi.SsdMobilenetv1Options({
    minConfidence: 0.3, // 0.1 ~ 0.9
  });
  // tinyFaceDetector model and options
  await faceapi.nets.tinyFaceDetector.loadFromUri("/models");
  this.state.netsOptions.tinyFaceDetector = new faceapi.TinyFaceDetectorOptions({
    inputSize: 224, // 160 224 320 416 512 608
    scoreThreshold: 0.5, // 0.1 ~ 0.9
  });
  // mtcnn model and options (deprecated and due to be removed soon)
  await faceapi.nets.mtcnn.loadFromUri("/models");
  this.state.netsOptions.mtcnn = new faceapi.MtcnnOptions({
    minFaceSize: 20, // 1 ~ 50
    scaleFactor: 0.56, // 0.1 ~ 0.9
  });
  this.state.discernVideoEl = this.$refs["video"];
  this.state.discernCanvasEl = this.$refs["canvas"];
  // Model loading is finished
  this.state.netsLoadModel = false;
  // Without a target image there is nothing to match against
  if (!this.targetImg) {
    this.state.faceMatcher = null;
    return;
  }
  // Create an image element from the path of the picture to compare against.
  // crossOrigin has to be set before src, otherwise it does not apply to the request.
  const targetFaceDOM = document.createElement("img");
  targetFaceDOM.crossOrigin = "anonymous";
  targetFaceDOM.src = this.targetImg;
  const detect = await faceapi
    .detectAllFaces(
      targetFaceDOM,
      this.state.netsOptions[this.state.netsType]
    )
    // Requires the face landmark model
    .withFaceLandmarks()
    // Requires the face recognition model
    .withFaceDescriptors();
  console.log("detect:", detect);
  if (!detect.length) {
    this.state.faceMatcher = null;
    return;
  }
  // Matcher built from the face descriptors of the target image
  this.state.faceMatcher = new faceapi.FaceMatcher(detect);
  console.log(this.state.faceMatcher);
  // Detection is started by the caller (see callCamera); starting it here
  // as well would run two detection loops in parallel.
},
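Because the models are fetched over the network, it can help to make sure they are loaded only once even if the dialog is opened several times. The following is a minimal sketch of that idea and not part of the original code: `loadOnce` is a hypothetical helper that caches the promise returned by any async loader, for example a function that wraps only the model-loading calls above.

```javascript
// Hypothetical helper: wraps an async loader so it runs at most once.
// Concurrent and later callers all receive the same promise.
function loadOnce(loader) {
  let pending = null;
  return function () {
    if (!pending) {
      pending = Promise.resolve()
        .then(loader)
        .catch((err) => {
          // Forget a failed attempt so that the next call can retry.
          pending = null;
          throw err;
        });
    }
    return pending;
  };
}
```

Wrapping only the model downloads, rather than all of `fnLoadModel`, would leave the target image free to be re-evaluated each time the dialog opens.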

Getting the camera video stream

callCamera() {
  const constraints = { video: true };
  const onError = (error) => {
    console.log("Video capture error: ", error.name || error.code, error.message);
  };
  // Attach the stream to the video element, then load the models and
  // start detection once loading has finished.
  // Arrow functions keep `this` bound to the component inside the callbacks.
  const onStream = (stream) => {
    const video = this.$refs["video"];
    if ("srcObject" in video) {
      video.srcObject = stream;
    } else if ("mozSrcObject" in video) {
      // Old Firefox
      video.mozSrcObject = stream;
    } else {
      // Old WebKit
      video.src = window.URL.createObjectURL(stream);
    }
    video.play();
    this.fnLoadModel().then(() => this.fnRedrawDiscern());
    console.log("Camera started");
  };
  // This is how current browsers obtain a video stream.
  // If older browsers do not need to be supported, this branch alone is enough.
  if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
    // Standard
    navigator.mediaDevices.getUserMedia(constraints).then(onStream).catch(onError);
  } else {
    // Legacy callback-style APIs: unprefixed, WebKit-prefixed, Firefox-prefixed
    const legacyGetUserMedia =
      navigator.getUserMedia ||
      navigator.webkitGetUserMedia ||
      navigator.mozGetUserMedia;
    if (legacyGetUserMedia) {
      legacyGetUserMedia.call(navigator, constraints, onStream, onError);
    } else {
      onError(new Error("getUserMedia is not supported in this browser"));
    }
  }
},
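The browser branching can also be folded into a single promise-returning function, which keeps success and error handling in one place. This is a sketch under assumptions: `getUserMediaCompat` is a hypothetical name, and the navigator object is passed in as a parameter so that the function can be exercised outside a browser.

```javascript
// Hypothetical helper: returns a promise for a MediaStream, preferring the
// standard API and falling back to the legacy callback-style variants.
function getUserMediaCompat(nav, constraints) {
  if (nav.mediaDevices && nav.mediaDevices.getUserMedia) {
    return nav.mediaDevices.getUserMedia(constraints);
  }
  const legacy =
    nav.getUserMedia || nav.webkitGetUserMedia || nav.mozGetUserMedia;
  if (!legacy) {
    return Promise.reject(new Error("getUserMedia is not supported"));
  }
  // Legacy signature: (constraints, successCallback, errorCallback)
  return new Promise((resolve, reject) => {
    legacy.call(nav, constraints, resolve, reject);
  });
}
```

A call such as `getUserMediaCompat(navigator, { video: true })` could then be chained with `.then(...)` and `.catch(...)`. Note that `navigator.mediaDevices` is only available in secure contexts (HTTPS or localhost), so on a plain HTTP page the standard branch is skipped.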

Turning off the camera

this.$refs["video"].srcObject.getTracks().forEach((track) => track.stop());
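When the dialog closes, it is usually worth cancelling the pending detection timer and releasing the stream as well, otherwise a scheduled detection pass may still fire or the camera may stay active. A small sketch, assuming a hypothetical helper `stopCamera` that receives the video element and the timer handle:

```javascript
// Hypothetical helper: cancels the detection timer and stops every track
// of the stream attached to the video element, if there is one.
function stopCamera(videoEl, timer) {
  clearTimeout(timer);
  const stream = videoEl && videoEl.srcObject;
  if (stream) {
    stream.getTracks().forEach((track) => track.stop());
    videoEl.srcObject = null;
  }
}
```

In the component this might be called as `stopCamera(this.$refs["video"], this.state.timer)`, for example from the dialog's close handler.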

Running detection

/** Detect faces in the video with the selected model and draw the results */
async fnRedrawDiscern() {
  // Nothing to compare against until the target image has produced a matcher
  if (!this.state.faceMatcher || !this.state.faceMatcher.findBestMatch) return;
  console.log("Run Redraw Discern");
  // Clear the timer when the video is paused
  if (this.state.discernVideoEl.paused) {
    clearTimeout(this.state.timer);
    this.state.timer = 0;
    return;
  }
  // Detect the faces in the current video frame
  const detect = await faceapi
    .detectAllFaces(
      this.state.discernVideoEl,
      this.state.netsOptions[this.state.netsType]
    )
    // Requires the face landmark model
    .withFaceLandmarks()
    // Requires the face recognition model
    .withFaceDescriptors();
  // detectAllFaces resolves to an array, so an empty result needs no special
  // case: nothing is drawn below and the next pass is scheduled as usual.
  // Match the canvas size to the video
  const dims = faceapi.matchDimensions(
    this.state.discernCanvasEl,
    this.state.discernVideoEl,
    true
  );
  const result = faceapi.resizeResults(detect, dims);
  result.forEach(({ detection, descriptor }) => {
    // Best match; the smaller the distance, the better the match
    const best = this.state.faceMatcher.findBestMatch(descriptor);
    // Text for the box: the label followed by the distance
    const label = best.toString();
    // The first face in the target image is labeled "person 1";
    // faces that do not match are labeled "unknown"
    if (best.label === "person 1" && !this.recogFlag) {
      this.$message.success("Recognition successful!");
      this.recogFlag = true; // success flag: recognition is not started again
      setTimeout(() => {
        // Turn off the camera
        this.$refs["video"].srcObject.getTracks().forEach((track) => track.stop());
        this.$router.push("/home"); // navigate to the home page
      }, 1000); // after a one-second delay
    }
    new faceapi.draw.DrawBox(detection.box, { label }).draw(
      this.state.discernCanvasEl
    );
  });
  // Keep detecting until recognition has succeeded
  if (!this.recogFlag) {
    this.state.timer = setTimeout(() => this.fnRedrawDiscern(), 100); // detection interval: 100 ms
  }
},
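To make the "smaller distance means a better match" rule concrete: face-api.js descriptors are arrays of 128 numbers, and `FaceMatcher` compares them by Euclidean distance, reporting anything at or beyond a threshold (0.6 by default) as unknown. The sketch below reimplements that idea in plain JavaScript for illustration only. `euclideanDistance` and `bestMatch` are hypothetical stand-ins rather than the library's code, they assume one reference descriptor per label, and the short vectors in the usage lines are made up.

```javascript
// Euclidean distance between two descriptors of equal length.
function euclideanDistance(a, b) {
  if (a.length !== b.length) throw new Error("descriptor lengths differ");
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    const d = a[i] - b[i];
    sum += d * d;
  }
  return Math.sqrt(sum);
}

// Returns the closest labeled descriptor, or "unknown" if it is not close enough.
// `references` is an array of { label, descriptor }.
function bestMatch(query, references, threshold = 0.6) {
  let best = { label: "unknown", distance: Infinity };
  for (const ref of references) {
    const distance = euclideanDistance(query, ref.descriptor);
    if (distance < best.distance) best = { label: ref.label, distance };
  }
  return best.distance < threshold
    ? best
    : { label: "unknown", distance: best.distance };
}

// Usage with made-up 3-element vectors (real descriptors have 128 elements).
const refs = [{ label: "person 1", descriptor: [0, 0, 0] }];
console.log(bestMatch([0.1, 0.2, 0.2], refs).label); // "person 1"
console.log(bestMatch([1, 1, 1], refs).label); // "unknown"
```

Lowering the threshold makes matching stricter, which reduces false accepts at the cost of more rejected attempts.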